Adversarial robustness remains a hard problem when deep neural networks are deployed in real-world settings. Adversarial training is a promising way to harden models, but most existing research evaluates it on balanced datasets and overlooks the uneven, long-tailed distribution of real-world data. As a result, models trained this way are markedly less robust in practical applications. To address this challenge, Professor Liu Jian's research team proposed the TAET (Two-Stage Adversarial Equalization Training) framework. The paper was accepted at the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), a leading international computer vision conference. Wang Yuhang, a master's student at our school, is the first author, and Professor Liu Jian is the corresponding author.

Paper Title: TAET: Two-Stage Adversarial Equalization Training on Long-Tailed Distributions

Authors: Wang Yu-Hang, Junkang Guo, Aolei Liu, Kaihao Wang, Zaitong Wu, Zhenyu Liu, Wenfei Yin, Jian Liu

Paper Link: https://arxiv.org/abs/2503.01924

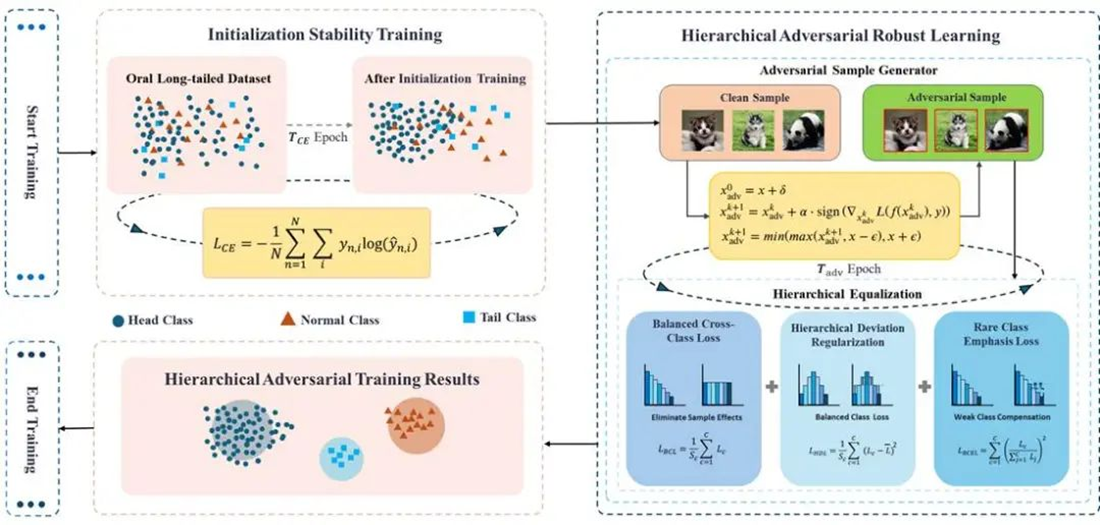

Figure 1. The TAET framework includes an Initial Stabilization Module (upper left) and the HARL module (right). The Initial Stabilization Module, based on cross-entropy loss, aims to stabilize accuracy in early training and transfers the trained model to HARL. Our HARL module consists of three components: BCL, HDL, and RCEL. A multi-step generation process creates perturbations (upper right), which are processed by normalization components (lower right).

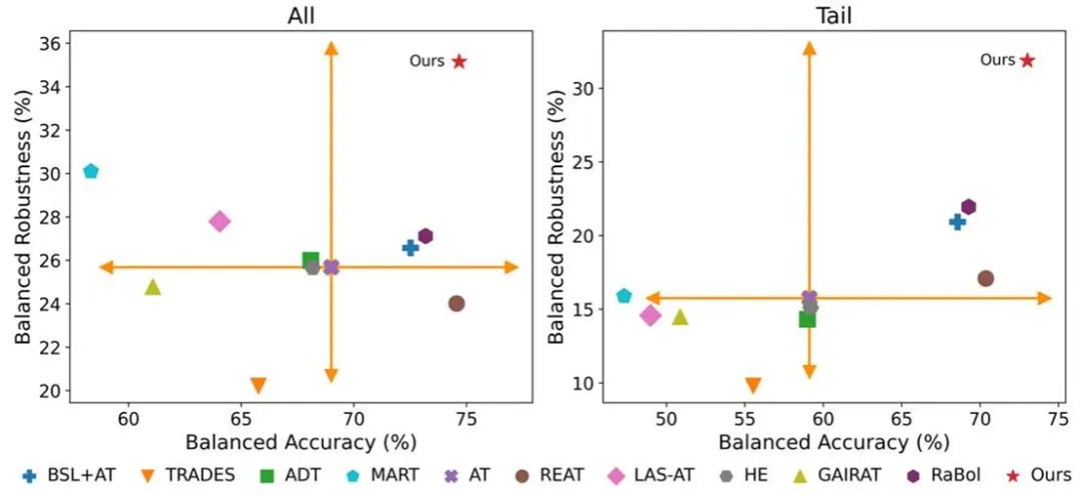

Figure 2. The evaluation includes both balanced accuracy and robustness, comparing long-tail recognition methods, adversarial training, state-of-the-art defenses, and our proposed approach. The results demonstrate that our method outperforms the others in both balanced accuracy and performance on weak classes.

Abstract: Adversarial robustness remains a significant challenge in deploying deep neural networks for real-world applications. While adversarial training is widely acknowledged as a promising defense strategy, most existing studies primarily focus on balanced datasets, neglecting the fact that real-world data often exhibit a long-tailed distribution, which introduces substantial challenges to robustness. In this paper, we provide an in-depth analysis of adversarial training in the context of long-tailed distributions and identify the limitations of the current state-of-the-art method, AT-BSL, in achieving robust performance under such conditions. To address these challenges, we propose a novel training framework, TAET, which incorporates an initial stabilization phase followed by a stratified equalization adversarial training phase. Furthermore, prior work on long-tailed robustness has largely overlooked a crucial evaluation metric: balanced accuracy. To fill this gap, we introduce the concept of balanced robustness, a comprehensive metric that measures robustness specifically under long-tailed distributions. Extensive experiments demonstrate that our method outperforms existing advanced defenses, yielding significant improvements in both memory and computational efficiency. We believe this work represents a substantial step forward in tackling robustness challenges in real-world applications. Our code can be found at https://github.com/BuhuiOK/TAET-TwoStage-Adversarial-Equalization-Training-on-Long-TailedDistributions
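To illustrate why balanced accuracy matters on long-tailed data, the following is a minimal sketch, not the authors' implementation. It assumes balanced accuracy is the mean of per-class recall, and that balanced robustness can be read as the same quantity computed on predictions for adversarially perturbed inputs; the paper's exact definition may differ. The function names and the toy labels are hypothetical.

```python
from collections import defaultdict


def plain_accuracy(y_true, y_pred):
    """Fraction of all samples classified correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall, so every class counts equally."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        if t == p:
            correct[t] += 1
    recalls = [correct[c] / total[c] for c in total]
    return sum(recalls) / len(recalls)


# Hypothetical long-tailed test set: 8 head-class samples (class 0)
# and 2 tail-class samples (class 1).
y_true = [0] * 8 + [1] * 2

# Hypothetical predictions on clean inputs: head 8/8 correct, tail 1/2 correct.
clean_pred = [0] * 8 + [1, 0]

# Hypothetical predictions on adversarial inputs: head 6/8 correct, tail 0/2 correct.
adv_pred = [0] * 6 + [1] * 2 + [0, 0]

clean_acc = plain_accuracy(y_true, clean_pred)
clean_bal = balanced_accuracy(y_true, clean_pred)
robust_acc = plain_accuracy(y_true, adv_pred)
# Assumption: balanced robustness = balanced accuracy on adversarial predictions.
balanced_robustness = balanced_accuracy(y_true, adv_pred)

print(f"clean accuracy:      {clean_acc:.3f}")
print(f"balanced accuracy:   {clean_bal:.3f}")
print(f"robust accuracy:     {robust_acc:.3f}")
print(f"balanced robustness: {balanced_robustness:.3f}")
```

In this toy example the plain metrics are dominated by the head class, while the balanced variants drop sharply because the tail class is classified poorly, which is the gap the balanced metrics are meant to expose.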

CVPR is an internationally recognized, CCF-A top-tier academic conference in computer vision and pattern recognition. According to the 2024 Google Scholar Metrics, CVPR has an h5-index of 440 and an h5-median of 689, ranking second globally. The acceptance of this paper also reflects our institute's research strength in trustworthy artificial intelligence.
